How to Choose the Best Data Annotation Type for Your ML Project

Caroline Lasorsa

Product Marketing | 2022/05/25 | 6 min read

Building a machine learning and computer vision model is nothing short of cumbersome for any team, especially if it’s the first go-around. Knowing where to start and which labeling types to use is half the battle. Your approach depends on the level of detail your project requires, along with your budget, data volume, and time constraints. It also relies heavily on how precisely each object needs to be outlined. If, for example, your team is training a model to identify specific cellular abnormalities in at-risk cancer patients, bounding boxes simply won’t do. On the other hand, if your team is trying to locate everyday objects in a residential neighborhood, bounding boxes work just fine. Establishing the end goal of your machine learning project is the first step in determining which labeling method to use. But how do we do that?

If this isn’t your first rodeo in the data labeling world, then you’re all too familiar with what it takes to label, build, and refine a machine learning model. If you’re a rookie, on the other hand, there are a few things to know before forging ahead, most notably which labeling type works best for your project.

Things to Consider:

1. Time

Building a computer vision model can take thousands of hours of manual and automated labeling to get right. Consider how much time you have to build out your model, how much time to allot for testing, and whether the model will need frequent updating.

2. Budget

Some teams, such as those at FAANG companies, have seemingly unlimited budgets and resources, meaning they can invest in a large workforce, use outside tools, and outsource manual labeling. Other teams are much smaller, lack financial flexibility, and are restricted in how much they can spend on labeling platforms.

3. Project Goals

Establishing what you’re looking to accomplish can help you figure out which approach you should take. Assessing the level of detail needed as well as determining the desired end result can help your team move in the right direction.

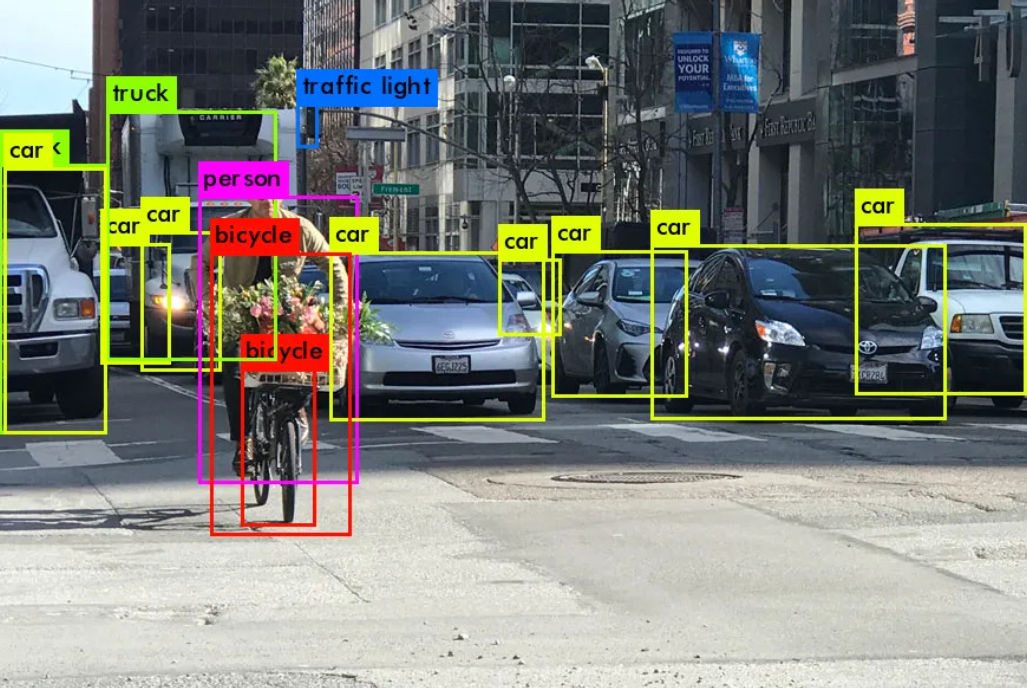

Bounding Boxes

Perhaps the easiest labeling type available is the bounding box. Here, an annotator uses a drawing tool to outline a specific object with a box, being careful to include only that object and to hug its edges. Bounding boxes are an excellent tool for tasks that require little detail about an object’s shape, such as object detection and localization. Before committing to them, consider how much detail your model actually needs: this is the recommended route if you’re just looking to identify an object as a whole rather than its specific outline.
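As a rough illustration of what a bounding-box label boils down to, the Python sketch below stores a box as its corner coordinates and derives the tightest box around a set of outline points. The `BoundingBox` class and the `tight_box` and `iou` helpers are hypothetical names chosen for this example and do not come from any particular labeling tool. The `[x, y, width, height]` layout mirrors the convention used by COCO-style datasets, and intersection over union (IoU) is included as one common way to check how closely two annotators’ boxes agree.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical helper)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self):
        width = max(0, self.x_max - self.x_min)
        height = max(0, self.y_max - self.y_min)
        return width * height

    def to_xywh(self):
        """Return [x, y, width, height], as in COCO-style labels."""
        return [self.x_min, self.y_min,
                self.x_max - self.x_min, self.y_max - self.y_min]


def tight_box(label, points):
    """Smallest axis-aligned box that contains every (x, y) point."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return BoundingBox(label, min(xs), min(ys), max(xs), max(ys))


def iou(a, b):
    """Intersection over union of two boxes: 1.0 is identical, 0.0 is disjoint."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    return inter / (a.area + b.area - inter)


# Made-up outline points for a single object on a shelf.
box = tight_box("cereal_box", [(10, 20), (50, 20), (30, 80)])
print(box.to_xywh())  # [10, 20, 40, 60]
```

A box that hugs the object’s edges keeps the IoU between independent annotators high, which is one practical way to spot loose or inconsistent labels.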

Real-World Applications

As a rule of thumb, certain tasks call for specific labeling types. Bounding boxes allow teams to quickly annotate everyday objects and are commonly used across the retail, manufacturing, and robotics industries. For example, retailers often implement security measures at their self-checkout stations, such as cameras to detect shoplifters. A machine learning model running on that camera footage can identify the objects patrons are taking, but only after it has learned to recognize them. Each object will have had to be annotated thousands of times, most likely with bounding boxes. Likewise, robots trained to restock shelves need to understand the objects being placed in each aisle. As in the previous application, these robots must recognize each item and where it goes on the shelf, and bounding boxes are well suited to the task.

In addition, bounding boxes are applied to everyday consumer use cases, many of which we wouldn’t give a second thought. For example, drones have grown in popularity among tech enthusiasts and professional photographers alike. We’ve grown accustomed to high-definition footage of our favorite sports matches, much of which is filmed from overhead by drones that people control. But drones can also fly on their own thanks to advancements in machine learning. To do so, they need to recognize everyday objects such as people, trees, or birds so that they can dodge them. Using bounding boxes, a drone model can be trained to recognize thousands of everyday objects and avoid collisions.

Polygon Annotation

For teams looking to build complex machine learning and computer vision models where meticulous detail is required, simple annotation techniques like bounding boxes won’t cut it. Instead, their project likely calls for more intricate labeling methods like polygon annotation. In this technique, a labeler draws a precise outline of an object’s shape by placing points along its edges. It works well for irregularly shaped items and cuts out extraneous information by ensuring that only the object itself is included in the annotation. Polygons are necessary when teams need to recognize a specific object and monitor only what’s included in the annotation, down to its fine details.
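To make the “extraneous information” point concrete, the sketch below compares the area of a polygon label with the area of the bounding box that would enclose the same object. It is a minimal illustration under stated assumptions: the function names and the L-shaped example polygon are made up for this post, and the area calculation uses the standard shoelace formula, which assumes a simple (non-self-intersecting) polygon.

```python
def polygon_area(vertices):
    """Area of a simple polygon from its ordered (x, y) vertices (shoelace formula)."""
    twice_area = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the shape
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def bounding_box_area(vertices):
    """Area of the smallest axis-aligned box around the polygon."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


def background_fraction(vertices):
    """Share of the enclosing box that is NOT part of the object."""
    box = bounding_box_area(vertices)
    if box == 0:
        raise ValueError("degenerate polygon: bounding box has zero area")
    return 1.0 - polygon_area(vertices) / box


# Hypothetical L-shaped object, in pixel coordinates.
l_shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]
print(polygon_area(l_shape))         # 7.0
print(background_fraction(l_shape))  # 0.5625
```

In this toy case more than half of the bounding box is background, which is the kind of noise a polygon label leaves out.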

Use Cases and Examples

Polygon annotation is commonly found in the scientific community and applied to medical use cases. For example, a model trained to recognize and diagnose a specific disease or abnormality will need to home in on a very precise facet of imagery. Outlining a tumor with a bounding box, for instance, includes far too much unnecessary information, whereas tracing just that region of a medical scan lets a model more easily identify it as abnormal. While polygon annotation is advantageous for imagery that demands precision, it also requires a lot of time and dedication. This can be a major drawback for smaller teams with limited budgets or resources. However, the time and labor a well-trained model saves in the long run can far outweigh the up-front hurdle of labeling.

Polygon annotation is likewise necessary in agricultural use cases. Those in AgTech, or agriculture technology, are looking to use data to make more informed decisions in the farming space. This can include disease detection in plants, monitoring rainfall and drought, and tracking growth patterns, to name a few. Agricultural machine learning applications tend to account for several different scenarios that happen in nature, such as the presence of pests and the level of ripeness. Because of this, a model trained for farming use cases must adapt to many changing variables that are beyond our control, which is why it requires such a high level of precision.

Polylines

A popular topic in the AI space is that of autonomous vehicles. It is perhaps the first thing you think of when artificial intelligence is mentioned, and for good reason. The idea of self-driving cars has topped the news headlines, for reasons both good and bad, and is becoming more of a reality with each technological advancement. But how do these cars know where to go or how to stay in their lane at the appropriate speed? Through machine learning and computer vision, ML engineers have trained their models to behave as if a human were behind the wheel. Much of that training involves understanding roadways and various traffic symbols. As one can imagine, an autonomous vehicle needs to comprehend lanes, stop lines, and when to pass. So it makes sense here to use polylines, an annotation method used when objects are linear.

When building out a polyline dataset, an annotator traces a series of connected straight line segments to outline the direction of a roadway or pathway. A car will need to understand the difference between a one-way street and a stop line, or when it can pass and when it can’t. There are several nuances to driving that people know by experience and from passing their driver’s tests. A self-driving vehicle, by contrast, must learn this through hundreds of thousands of annotated images. What’s more, traffic rules vary across countries, so it’s paramount that a model is able to decipher these different scenarios and make informed decisions based on them. Polylines offer an easy way to trace important roadways and lines without including too much detail.
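A polyline label can be pictured as nothing more than an ordered list of points joined by straight segments. The sketch below is a minimal illustration, assuming Python 3.8 or later for `math.dist`. The `Polyline` class, the `lane_boundary` label, and the coordinates are hypothetical and not taken from any specific annotation format.

```python
import math
from dataclasses import dataclass


@dataclass
class Polyline:
    """Open chain of connected straight segments (hypothetical structure)."""
    label: str
    points: list  # ordered (x, y) vertices, in pixel coordinates

    def segments(self):
        """Pair each vertex with the next one; the chain is NOT closed."""
        return list(zip(self.points, self.points[1:]))

    def length(self):
        """Total length of the traced line, summed over its segments."""
        return sum(math.dist(a, b) for a, b in self.segments())


# Made-up lane boundary traced with three clicks.
lane = Polyline("lane_boundary", [(0, 0), (3, 4), (3, 10)])
print(len(lane.segments()))  # 2
print(lane.length())         # 11.0
```

Unlike a polygon, the last point is not joined back to the first, so the label describes a path rather than an enclosed region.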

Keypoints

Like polylines, keypoint annotation labels an object using a line structure rather than a detailed outline or a simple box. Here, an annotator’s goal is to make a skeletal outline of an object, annotated at its major points of movement. For example, a machine learning model designed to understand a person’s exercise form will look for major joints in the body. Similarly, face detection technology is trained to recognize significant points in the face, such as the eyes, nose, and mouth. Keypoints allow for easy annotation that focuses on areas of interest, omitting insignificant information.
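As a small illustration of how keypoints support something like exercise-form analysis, the sketch below stores a few named joints, connects them with skeleton edges, and measures the angle at the knee. The keypoint names, coordinates, and the `joint_angle` helper are assumptions made for this example. Real datasets define their own keypoint sets and often attach extra fields such as a visibility flag.

```python
import math

# Hypothetical keypoints for one leg, as (x, y) pixel coordinates.
keypoints = {
    "hip": (100, 100),
    "knee": (100, 200),
    "ankle": (200, 200),
}

# Skeleton edges record which keypoints are connected to each other.
skeleton = [("hip", "knee"), ("knee", "ankle")]


def joint_angle(a, b, c):
    """Angle in degrees at point b, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    return math.degrees(math.atan2(abs(cross), dot))


# Length of each "bone" in the skeleton (math.dist needs Python 3.8+).
bone_lengths = {
    edge: math.dist(keypoints[edge[0]], keypoints[edge[1]]) for edge in skeleton
}

knee_angle = joint_angle(keypoints["hip"], keypoints["knee"], keypoints["ankle"])
print(round(knee_angle, 1))  # 90.0
```

Three labeled points are enough to recover the joint angle here, which is why keypoints can stay so sparse compared with a full outline.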

Use Cases

As stated above, keypoint annotation focuses on major intersections in the body, such as joints or facial features. This makes it the go-to strategy for ML professionals building movement detection technology. New at-home workout machines such as Tonal and Mirror can detect a person’s form in real-time and suggest corrections, therefore promoting healthy workout practices and preventing injuries. Movement detection goes beyond these very specific and niche use cases, however. In the medical field, for example, doctors and scientists are studying gait analysis and measurements to monitor and treat various diseases. In sports, professional scouts are analyzing a player’s movement to predict future performance and make draft decisions.

Keypoint applications are used every day when we unlock our phones just by looking at the screen. Fun filters in programs like Snapchat and Instagram are built on the same technology, and security applications are trained to recognize the faces of criminals wanted by the law. By annotating only specific parts of the face or body, ML models can concentrate on those important aspects. Unlike denser labeling techniques such as polygons, keypoints omit everything but the points of interest. This makes them an ideal strategy for these scenarios and use cases.

The Takeaway

In the vast field of machine learning and computer vision, it’s paramount to understand how each project and application should be approached. Agricultural use cases with a goal of monitoring plant health and detecting disease will need heavily detailed labels and will therefore need polygon annotation. Self-driving cars, on the other hand, will need to understand road outlines, their boundaries, and their meanings through polylines. Simple object detection problems can look to bounding boxes, and movement detection and facial recognition software can look to keypoints.

Determining the end goal of your project will ultimately help you understand which annotation type will yield the best results. From there, you will be able to decide which tools to use, who to appoint to your team, and how to train your model. At Superb AI, we’ve built a comprehensive data labeling suite designed to simplify annotation. For more information, please visit https://www.superb-ai.com/contact to schedule a call with our sales team.
