The typical ML lifecycle consists of three pieces: (1) Data Preparation (data collection, data storage, data augmentation, data labeling, data validation, feature selection), (2) Model Development (hyper-parameter tuning, model selection, model training, model testing, model validation), and (3) Model Deployment (model inference, model monitoring, model maintenance). It appears that the deficit of attention from experts to one of the most critical areas of the ML lifecycle — that of data preparation — is the likely cause for a still highly dysfunctional ML lifecycle.

While general wisdom acknowledges that high-quality training data is necessary to build better models, the lack of a formal definition of a good dataset — or rather, the right dataset for the task — is the main bottleneck impeding the universal adoption of AI. This entails asking questions such as: What data? Where to find that data? How much data? Where to validate that data? What defines quality? Where to store that data? How to organize that data?

Jennifer Prendki, the founder and CEO of Alectio, stopped by the Datacast podcast to talk about the idea of DataPrepOps and how to do it well. Jennifer’s direct experience going through this process at organizations like Walmart Labs, Atlassian, and Figure Eight provided some valuable insights. Listen to the episode below, or scroll down to read the highlights.

Getting Into Data Science

Back in her finance career, Jennifer often worked with the Black-Scholes equation or time series models. She felt the urge to work on more sophisticated modeling methods. Around 2014, data science started to become popular. The big tech companies started to put more emphasis on their data initiatives.

After her time at Quantlab Financial, she tried to move to places where she could do things from the beginning. She decided to go to YuMe since they had recently acquired a company whose CEO was John Wainwright, the first customer of Amazon. John also pioneered pure object-based computer languages, which serve as the core behind game development and 3D animation technologies used today. For Jennifer, the opportunity to work with John was worth more than any potential salary or benefits. However, by the time she joined YuMe, John had resigned.

She ended up in a weird situation where she didn’t know exactly what the company wanted her to work on as a data scientist. She was forced into (almost) a managerial position, making high-stakes decisions for the company when she barely knew about data science herself. Jennifer quickly realized that she enjoyed this a lot; for instance, she was very efficient in communicating with engineering teams. She figured that what she was really good at was more data strategy than model development.

YuMe gave Jennifer many of the responsibilities that a manager has, but they didn’t give her the budget. It took a bit of time for somebody to trust her completely. She learned back in those days that if it’s not the right place for you, you shouldn’t be afraid to move. For her, it’s crucial never to lose sight of what she needs for her career.

“If I’m not with the right people or in the right environment, I shouldn’t be afraid to make the decision that enables me to grow.”

Measuring Data Science ROI

When Jennifer joined Walmart Labs, she started in an individual contributor role as a principal data scientist. Later on, she wanted to exercise a different muscle: working on larger-scale initiatives (which would be easier in a big company).

During this period, a typical data science team consisted of people with Ph.D. degrees researching model development. However, many companies were struggling to convert those models into something that actually made money. There was no communication between the business and research sides in such a large company (like Walmart). The data scientists were completely cut off from the business goals.

“To become an effective data scientist, you need to understand what the C-suite people want to achieve.”

Inside the Metrics-Measurements-Insights team that Jennifer managed, they talked to all the stakeholders in different teams, identified ways to measure success for them, found proxies for different measurements, and communicated whether they were moving the needle for them. You have to keep in mind that a company that started a data science team wants to see the ROI. If you are building models that do not help people, there’s a chance that you will get laid off. Thus, companies must have this sort of initiative.

For Jennifer, it’s never too early for anyone who wants to enter the data space to understand that: Data is at the service of the business. Companies invest in big data initiatives to sell more products, attract more customers, make things easier, etc. If you don’t keep in mind that there is a business goal at the end of the day, you’re going to fail.

Pioneering Active Learning

The traditional way of building an ML model is supervised learning: given an acquired dataset, you annotate it and use it for your model. Active learning is basically a specialized way of doing semi-supervised learning. In semi-supervised learning, you work with some annotated data and some un-annotated data. In active learning, you prioritize strategic pieces of data by going back and forth between training and inference. You take a small set of data, annotate this data, train your model with this data, see how well the model performs, and think about what piece of data to focus on next.

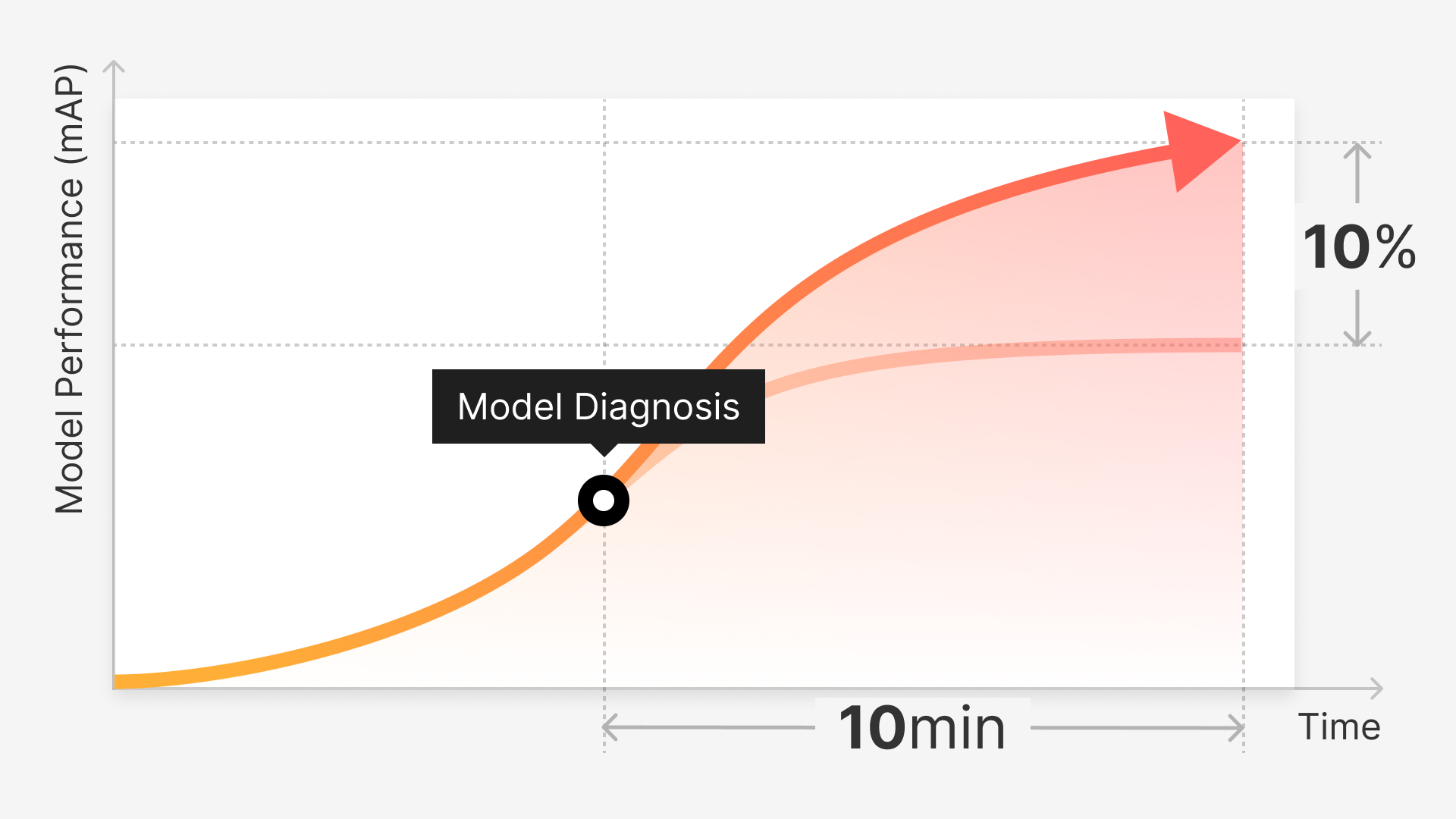
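The back-and-forth between annotation, training, and inference described above can be sketched as a short loop. This is a minimal illustration, not Jennifer's or Alectio's implementation: the function `active_learning_loop`, its parameters, and the toy one-dimensional threshold "model" (`toy_oracle`, `toy_train`, `toy_priority`) are all hypothetical names made up for this example.

```python
import random
from typing import Any, Callable, Dict, List, Sequence, Tuple


def active_learning_loop(
    pool: Sequence[Any],
    oracle: Callable[[Any], Any],                   # returns the label of an item (the annotator)
    train: Callable[[List[Tuple[Any, Any]]], Any],  # trains a model from (item, label) pairs
    priority: Callable[[Any, Any], float],          # higher = more worth labeling next
    batch_size: int,
    n_loops: int,
    seed: int = 0,
) -> Tuple[Any, Dict[int, Any]]:
    rng = random.Random(seed)
    unlabeled = list(range(len(pool)))
    rng.shuffle(unlabeled)  # the first batch is random: no model exists yet
    batch, unlabeled = unlabeled[:batch_size], unlabeled[batch_size:]
    labeled: Dict[int, Any] = {}
    model = None
    for _ in range(n_loops):
        # 1. Annotate only the selected batch.
        for i in batch:
            labeled[i] = oracle(pool[i])
        # 2. Retrain on everything labeled so far.
        model = train([(pool[i], y) for i, y in labeled.items()])
        if not unlabeled:
            break
        # 3. Run inference on the unlabeled rest and choose what to label next.
        unlabeled.sort(key=lambda i: priority(model, pool[i]), reverse=True)
        batch, unlabeled = unlabeled[:batch_size], unlabeled[batch_size:]
    return model, labeled


# Toy problem (an assumption for illustration): learn a threshold on [0, 1).
def toy_oracle(x: float) -> int:
    return int(x >= 0.6)


def toy_train(examples: List[Tuple[float, int]]) -> float:
    neg = [x for x, y in examples if y == 0]
    pos = [x for x, y in examples if y == 1]
    if not neg or not pos:
        return 0.5  # fallback guess while only one class has been seen
    return (max(neg) + min(pos)) / 2


def toy_priority(threshold: float, x: float) -> float:
    return -abs(x - threshold)  # points near the decision boundary come first


pool = [i / 100 for i in range(100)]
model, labeled = active_learning_loop(
    pool, toy_oracle, toy_train, toy_priority, batch_size=5, n_loops=4
)
print(len(labeled), "labels used out of", len(pool), "- learned threshold:", model)
```

The `priority` function is the querying strategy; swapping it out changes which data gets annotated next without touching the rest of the loop.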

A popular way of doing active learning is to look at some measurement of model uncertainty. You train your model with a little bit of data and perform inference on the rest of the dataset (which is not labeled yet). You can say: “It seems that the model is relatively sure that it makes the right predictions on these classes of data, so I won’t need to focus on them.”
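One common way to turn "model uncertainty" into a number is the entropy of the predicted class probabilities; this is one option among several (least confidence and margin are others), and the source does not say which measure Jennifer used. The sketch below assumes the model's predictions on unlabeled items are already available as probability lists; `entropy`, `most_uncertain`, and the sample data are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy of a predicted class distribution (higher = less sure)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def most_uncertain(predictions: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Return the ids of the k unlabeled items the model is least sure about."""
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:k]


# Made-up predicted probabilities over three classes for three unlabeled items.
predictions = {
    "img_a": [0.98, 0.01, 0.01],  # confident: low priority for labeling
    "img_b": [0.40, 0.35, 0.25],  # nearly undecided: label this first
    "img_c": [0.70, 0.20, 0.10],
}
print(most_uncertain(predictions, k=2))  # → ['img_b', 'img_c']
```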

Note: Watch Jennifer’s talk Introduction to Active Learning at ODSC London 2018

Jennifer started using active learning at Walmart Labs because of her team’s ridiculously small labeling budget. Furthermore, she realized that a big problem with regular active learning is that it needs to be tuned. Active learning is the principle of doing things in batches (or loops), but practitioners don’t quite know yet how to pick the right number of batches smartly.

She saw an analogy between active learning today and deep learning 10 years ago.

When she started her career, deep learning had started to become popular, but not quite at its peak yet. Many people gave it a try and thought that deep learning did not work. It requires a lot of expertise to choose the right number of epochs, number of neurons, batch size, etc. If you don’t do that accurately, you can fail miserably.

The same happens with active learning. If you have the wrong querying strategy (the rule for picking the next batch), you can end up in a situation where your model is way worse than it could have been with the entire dataset.

Another challenge with active learning is that it is compute-greedy. Although it saves you on the labeling cost, you have to retrain your model regularly. If every loop restarts training from scratch on all the data labeled so far, then for a fixed batch size the total compute grows roughly as N² with the amount of data N, rather than linearly. Given this tradeoff, you have to see whether it makes sense to spend extra compute for the sake of saving labels.
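A rough back-of-the-envelope sketch shows where the quadratic growth comes from. It rests on simplifying assumptions that are not in the source: training cost is proportional to the number of labeled samples seen per epoch, the batch size is fixed, every loop retrains from scratch, and labeling continues until the whole dataset is used (the worst case). The function name `retraining_cost` is hypothetical.

```python
import math


def retraining_cost(n_samples: int, batch_size: int, epochs: int = 1) -> int:
    """Total samples processed across all loops when each loop retrains from scratch."""
    n_loops = math.ceil(n_samples / batch_size)
    total = 0
    for loop in range(1, n_loops + 1):
        labeled_so_far = min(loop * batch_size, n_samples)
        total += labeled_so_far * epochs  # one full training run on the labeled set
    return total


print(retraining_cost(1000, 100))  # → 5500, versus 1000 for a single training run
print(retraining_cost(2000, 100))  # → 21000: doubling the data roughly quadruples the cost
```

With k = N / b loops of batch size b, the sum is b · k(k + 1) / 2, which is about N² / (2b). In practice the loop is stopped well before all N samples are labeled, which is exactly the tradeoff between compute and labeling cost.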

Being Bullish on DataPrepOps

Answering the question, “What does it take to be successful with ML as an organization?”, Jennifer outlined 3 components: the technology, the organization, and the operation. Today, the industry is good at technology. However, it’s evident everywhere she has been that we suck at the organizational and operational aspects. She has advised a lot of small and large companies alike. Nobody got it right.

The organizational part entails understanding how ML people interact with their colleagues and setting up a data culture. Unfortunately, we still live in a world where the C-suite execs don’t know how and what to do with the data collected.

The operational part mostly includes brute-force approaches to get MVP solutions everywhere. MVPs are cool, but they are often inefficient and too expensive to prototype. She believes we can’t win with ML if we don’t get MLOps right. The good news is that over the last 18 months, there have been many companies popping up and helping practitioners with various tasks of the ML lifecycle development.

When we think about the ML lifecycle, we have data preparation (getting data into the right shape), model development (by default, what people think of when it comes to ML), and model deployment (putting models into the real world). There have been many investments from the VC community in model development and model deployment tools.

“The one thing that has not been operationalized properly thus far is the data preparation piece.”

Ask any data scientists out there how they spend their time, and they will say they spend 75–80% of it on feature engineering, data labeling, data cleaning, etc. Jennifer believes that there is not enough investment in data preparation and not enough companies working on it. It’s also worth noting that data preparation goes beyond data labeling and data storage.

Founding Alectio

Jennifer considers herself a reluctant entrepreneur. Her original thesis is that we should stop believing big data is the only solution for building better ML systems. She tried to evangelize this thesis at all of her previous employers. Eventually, she realized that nobody was tackling this huge problem. The concept of “Less Is More” is popular in our society, but not so much in big data. There’s no doubt that big data unleashes real opportunities for ML. However, we are currently facing the reverse problem: we build bigger data centers and faster machines to deal with the massive amount of data. To her, this was not the wise approach. From an economic perspective, you can easily understand why some large companies have the incentive to make everybody believe that big data is necessary: the more data, the more money they will make.

Source: https://alectio.com/product/

She thinks about the sustainability of ML in two ways: (1) sustainable environments (fewer data centers and less electricity used for servers) and (2) sustainable initiatives from large organizations. Many problems have come from the scale of data that we need to tame. Any dataset is made up of useful, useless, and harmful data:

The useful data is what you want. It contains the information that helps your models.

The useless data is neither good nor bad. It wastes your time and money (and typically represents a big chunk of your dataset).

The harmful data is the worst piece. You store it and train models on it, but you get worse performance as a result.

“For me, ML 2.0 is about demanding higher quality from the data.”

However, there is a distinction between the quality and the value of the data. Value depends on the use case. Data that might be useful for model A won’t necessarily be useful for model B. Thus, you need to perform data management in the context of what you are trying to achieve with it. None of the data management companies are doing this today.

Alectio’s mission is to urge people to tame down the data and come back to sanity to some extent.

Advocating for Responsible AI

Jennifer believes that delivering fair AI means that everybody has access to the same technology and benefits from the technology in the same way.

“We want AI to be the solution to the unfair society that we live in.”

One thing that scares her with the progress in AI is the disappearance of blue-collar jobs. This is not a bad thing. We want people to move on to different jobs that are not dangerous. However, if we continue our current path, the rich get richer, and the poor get poorer.

A striking example of this is data labeling: When a rich startup or a large company in Silicon Valley wants to build ML models, they need to label their data. They will rely on labeling companies. These companies rely on actual people who do that job. Most of these labelers are based in third-world nations like Kenya, Madagascar, Indonesia, the Philippines, etc. There are instances where these companies took a large chunk of the money and did not compensate these labelers fairly. In some cases, labelers are put in slavery-like conditions, forced to label without a proper salary.

There’s a huge opportunity to have poor people in those countries benefit from the AI economy via data labeling. But we need to ensure that we do not increase social disparity because of AI. She thinks there must be regulations in terms of how much labelers get paid.

Note: Watch Jennifer’s keynote talk The Importance of Ethics in Data Science at the Women in Analytics Conference 2019

Check out the full episode of Datacast to hear more valuable insights from Jennifer—including her educational background in Physics, how to bring the concept of agile to data science teams, and her advice for women entering the industry.