
Validate labels at scale: Introducing Manual QA and a more intuitive platform interface

2021/09/02 | 6 min read

Your data labeling workhorse, now with reimagined label validation capabilities and a fresh new look

Introduction

If you build machine learning (ML) systems in the real world, then you know how crucial it is to have high-quality labeled training data. High-quality labeled data empowers ML teams to develop powerful ML systems quickly and to improve the performance of those systems once they are in production. But truly high-quality labeled data is hard to come by. The data labeling process spans a wide variety of tasks: using tools to collect and enrich data, performing quality assurance on the labels, managing data labelers, and iterating on the process until the data is delivered into actual models, and even after model inference, to optimize those models.

In particular, validating label accuracy is vital to ensuring overall label quality.

• Accuracy measures how close the labeling is to the ground truth, or how well the labeled features in the data are consistent with real-world conditions.

• Quality is about label accuracy across the overall dataset. Does the work of all labelers look the same? Is labeling consistently accurate across the dataset? This matters no matter how many labelers are working at the same time.

Validation is essential because every labeler is different. It's almost impossible to train everyone to be 100% accurate at the beginning of a project, and labelers are human, so mistakes will always occur, at least occasionally. These mistakes can quickly diminish the precision and value of the resulting model predictions. Yet while label validation is necessary, it can be time-consuming when done ad hoc (some managers report spending as much as 50% of their time validating labels). That is why it is crucial to have a well-defined, streamlined review workflow in place.

To solve these challenges, we're excited to announce Manual Quality Assurance (QA), a powerful new set of features built to streamline the label validation workflow so you can consistently collect high-quality labels without significant effort. We're also excited to unveil some big improvements we've made to the platform UI based on extensive research and conversations with you, our valued customers. Beyond the visual changes, we've made it faster and easier to navigate the platform and made the data upload process much clearer, reducing confusion and errors.

Introducing Manual QA

This release of Manual QA gives a designated expert or member of your team the ability to (1) manually review, validate, and filter completed (submitted) labels, and (2) track the progress of your validation efforts through the existing Project Overview tab and new filters. Let's unpack these activities further:

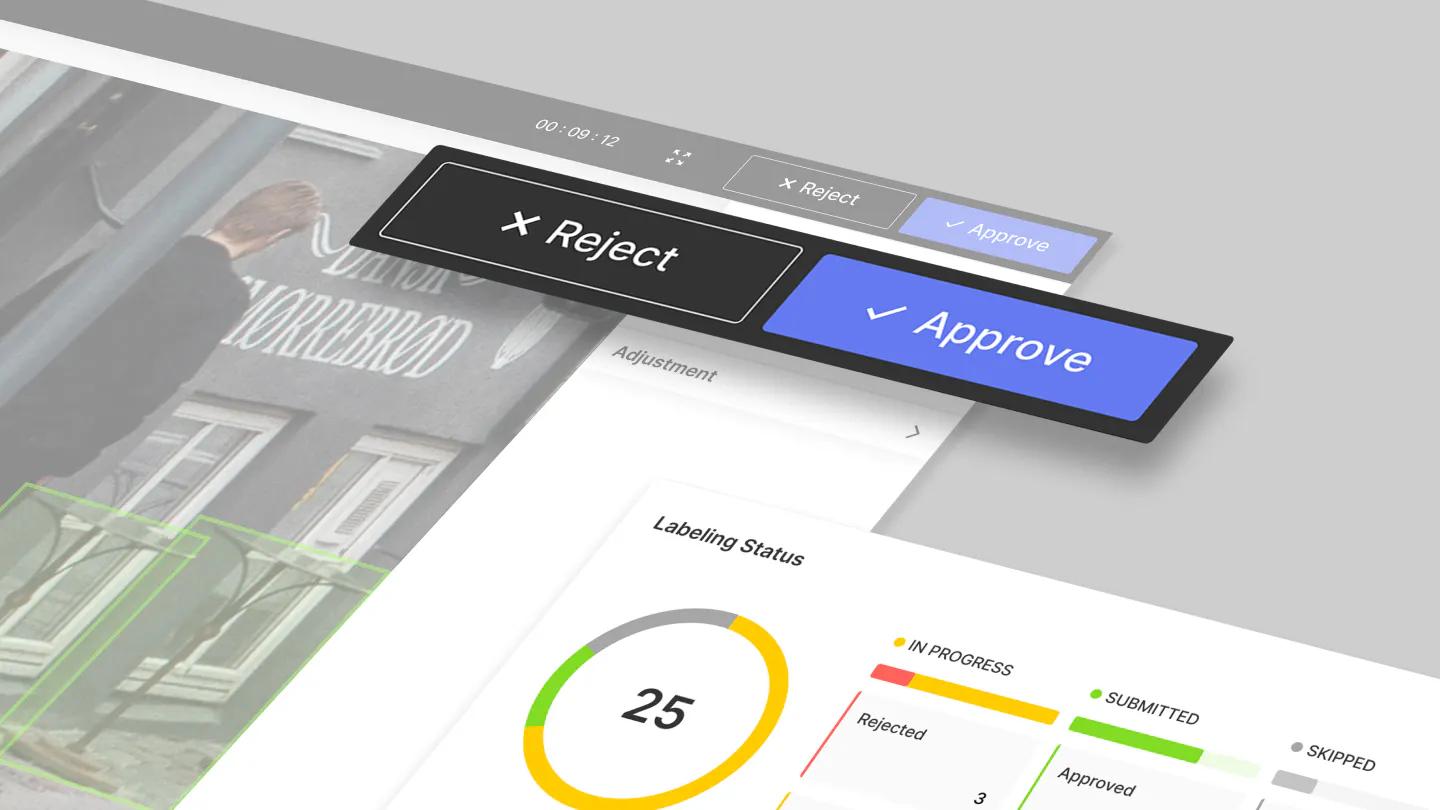

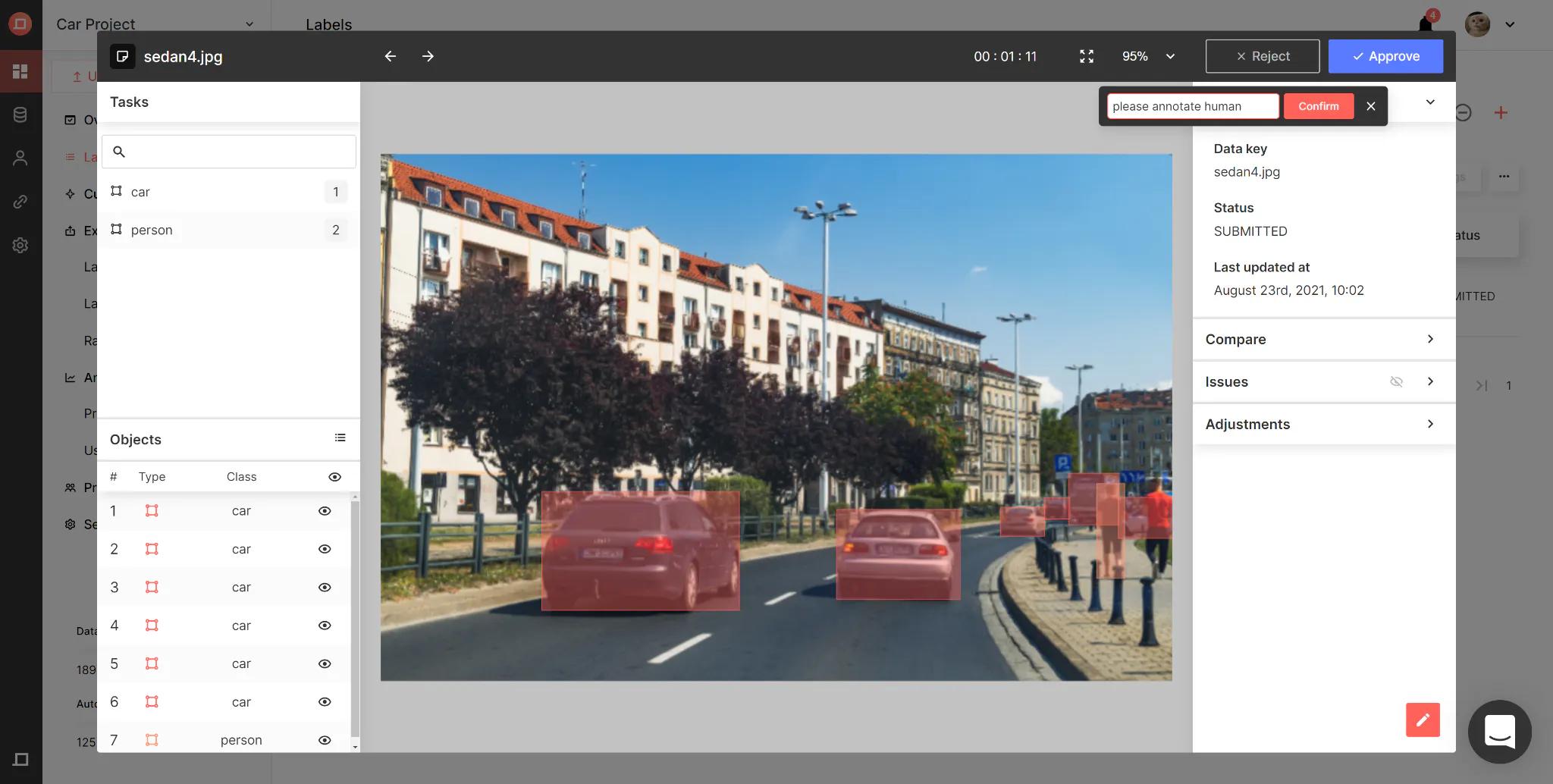

Approve or reject submitted labels: Previously, deciding whether to approve or reject a submitted label was a purely subjective call. Manual QA helps validate label quality by adding a defined approval process, which makes errors more visible and therefore lowers the risk of future model quality issues.

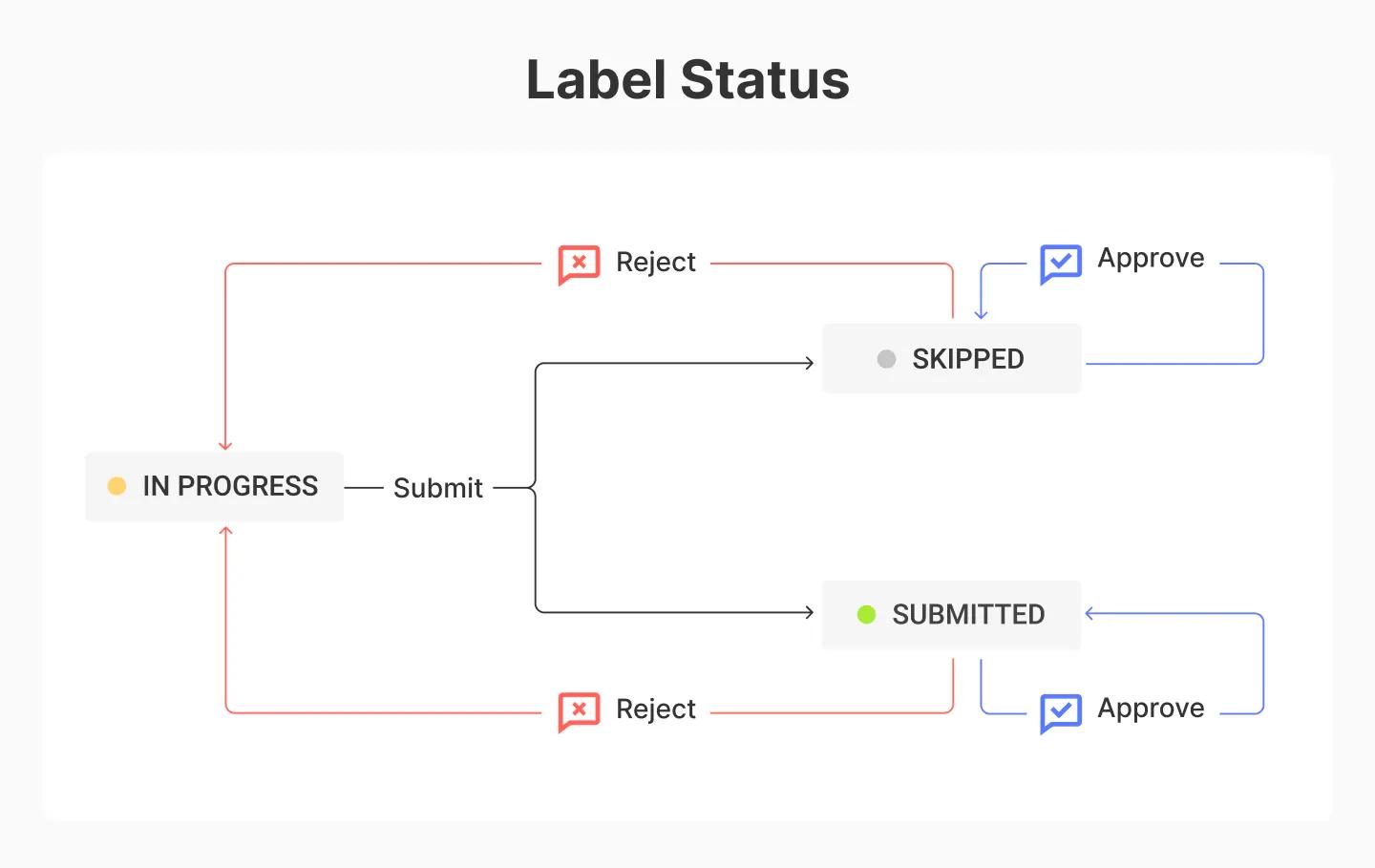

The approval process is simple, yet highly effective when used consistently. When labels are submitted or skipped from within the annotation app, or when their status is changed manually from the list of options in the label list view, the reviewer (typically a manager or other team leader) reviews each label and either approves or rejects it. If approved, the label is presumably good to go. If rejected, the label status automatically reverts to In Progress and an issue thread is created. The original labeler or another team member then reworks the label and submits it again for review. The label either passes this time or goes back through the cycle until the required accuracy is met.
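To make the approval cycle concrete, here is a minimal Python sketch that models it as a small state machine. Everything in it is hypothetical: the `Label` class, its method names, and the `Status`/`Review` enums are illustrative only and are not the Suite's API, and the issue thread is reduced to a list of strings. It assumes, as described above, that only submitted or skipped labels can be reviewed and that a rejection sends the label back to In Progress with issue details attached.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    IN_PROGRESS = "In Progress"
    SUBMITTED = "Submitted"
    SKIPPED = "Skipped"


class Review(Enum):
    NONE = "Not Reviewed"
    APPROVED = "Approved"
    REJECTED = "Rejected"


@dataclass
class Label:
    # Hypothetical model of one label moving through the review cycle.
    labeler: str
    status: Status = Status.IN_PROGRESS
    review: Review = Review.NONE  # most recent review action
    issues: List[str] = field(default_factory=list)  # stand-in for issue threads

    def submit(self, skip: bool = False) -> None:
        # The labeler hands the label off for review (or skips it).
        if self.status is not Status.IN_PROGRESS:
            raise ValueError("only an in-progress label can be submitted")
        self.status = Status.SKIPPED if skip else Status.SUBMITTED

    def approve(self) -> None:
        self._require_reviewable()
        self.review = Review.APPROVED

    def reject(self, issue: str) -> None:
        # A rejection must say why, so surfaced issues never go unrecorded.
        self._require_reviewable()
        if not issue.strip():
            raise ValueError("a rejection must include issue details")
        self.review = Review.REJECTED
        self.issues.append(issue)         # open an issue thread
        self.status = Status.IN_PROGRESS  # back to the labeler for rework

    def _require_reviewable(self) -> None:
        if self.status not in (Status.SUBMITTED, Status.SKIPPED):
            raise ValueError("only submitted or skipped labels can be reviewed")


# One pass through the cycle: submit, reject, rework, resubmit, approve.
label = Label(labeler="John")
label.submit()
label.reject("Bounding box cuts off the trunk")
label.submit()
label.approve()
print(label.status.value, label.review.value, label.issues)
```

Keeping the last review action separate from the label status is a design choice in this sketch: it lets a label that was rejected and then resubmitted be told apart from one that has never been reviewed.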

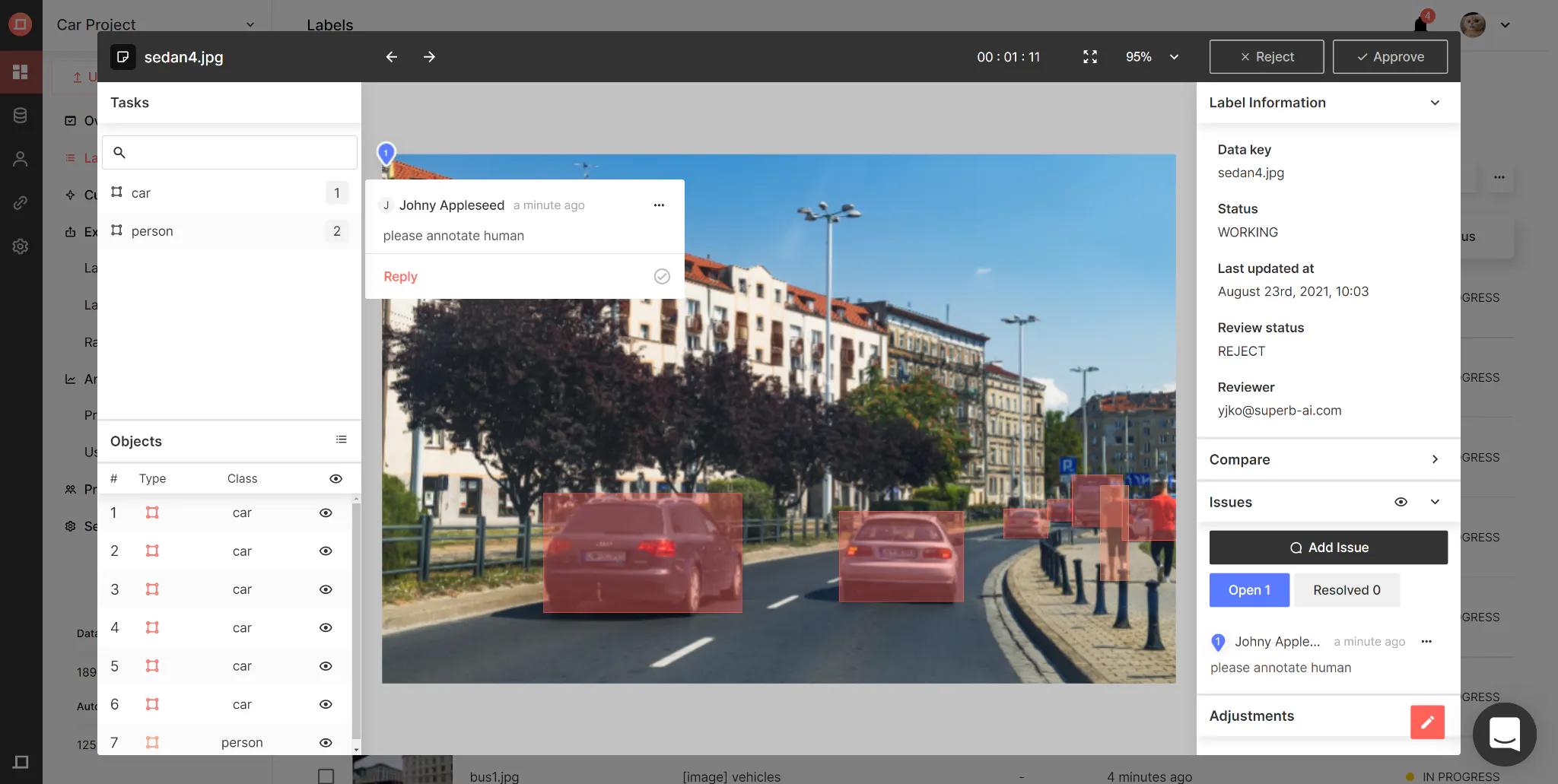

Create an issue thread for rejected labels: When labels are rejected, it can be challenging for reviewers to diagnose the issues and, at the same time, tell the labeling team what to do about them. It is far simpler with an interface that surfaces these issues in one place, so reviewers can see them at a glance and quickly alert labelers to the fixes needed.

Manual QA streamlines this process by requiring reviewers to enter issue details whenever they reject a label, so surfaced issues never go unrecorded. The issue tab then displays the reviewer and the issue, letting the assigned labeler quickly see what needs to be reworked and why, which reduces the time spent resubmitting rejected labels.

Issue threads are useful both with Manual QA and on their own: you can start a conversation on any piece of raw data or label, provide relevant, contextual feedback, ask for additional internal support using mentions, and more. Because you can open, resolve, and re-open multiple issue threads within a single label, it is easy to track and work through each fix that needs to be completed before the label is resubmitted for approval.

Filter the reviewed labels by review or reviewer: Having the right labels on hand is crucial for constructing high-quality training datasets. From our past conversations, we know many of our clients would love complete control over which labels they keep.

Manual QA enables more effective data slicing and project management by adding filters for review and reviewer. This functionality shines when combined with Superb's other filters and search capabilities, since you can stack multiple filters to home in on a specific set of data or labels.

For example, you could filter all approved labels submitted by labeler John and reviewed by manager Terry over the last week to do a quick spot check. Or you could filter all labels of the object class elephant that reviewer Bryan rejected over the last month to quickly understand the most common issues related to elephants in your dataset. This might reveal, for instance, that you need better elephant data overall if image quality is the problem!
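Stacking filters amounts to keeping only the labels that satisfy every active filter at once. The Python sketch below illustrates that idea under stated assumptions: the `LabelRecord` shape and the helper names (`by_labeler`, `by_review`, `stack`, and so on) are hypothetical and are not Superb AI's query interface, and "over the last week" is read here as a submission-date window, which is itself an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class LabelRecord:
    # Hypothetical flattened view of one label and its review metadata.
    labeler: str
    reviewer: Optional[str]  # None until someone reviews the label
    review: str              # "Approved", "Rejected", or "Not Reviewed"
    object_classes: FrozenSet[str]
    submitted_at: datetime


Predicate = Callable[[LabelRecord], bool]


def by_labeler(name: str) -> Predicate:
    return lambda r: r.labeler == name


def by_reviewer(name: str) -> Predicate:
    return lambda r: r.reviewer == name


def by_review(action: str) -> Predicate:
    return lambda r: r.review == action


def by_class(object_class: str) -> Predicate:
    return lambda r: object_class in r.object_classes


def submitted_since(cutoff: datetime) -> Predicate:
    return lambda r: r.submitted_at >= cutoff


def stack(records: Iterable[LabelRecord], *filters: Predicate) -> List[LabelRecord]:
    """Keep only the records that satisfy every filter (logical AND)."""
    return [r for r in records if all(f(r) for f in filters)]


# Made-up sample data mirroring the two examples above.
now = datetime(2021, 9, 2)
records = [
    LabelRecord("John", "Terry", "Approved", frozenset({"elephant"}), now - timedelta(days=2)),
    LabelRecord("John", "Terry", "Approved", frozenset({"zebra"}), now - timedelta(days=10)),
    LabelRecord("Ana", "Bryan", "Rejected", frozenset({"elephant"}), now - timedelta(days=20)),
]
spot_check = stack(
    records,
    by_labeler("John"), by_reviewer("Terry"), by_review("Approved"),
    submitted_since(now - timedelta(weeks=1)),
)
elephant_rejections = stack(
    records,
    by_reviewer("Bryan"), by_review("Rejected"), by_class("elephant"),
    submitted_since(now - timedelta(days=30)),
)
```

Treating each filter as an independent predicate keeps them composable: adding another criterion means passing one more function to `stack`, and passing none returns every record.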

Track review progress in the Project Overview tab: For any project manager of a data labeling team, keeping track of exactly which labeler is doing which task can be a daunting job in itself. It can be equally challenging for labelers to learn each other's responsibilities so that they do not step on each other's toes.

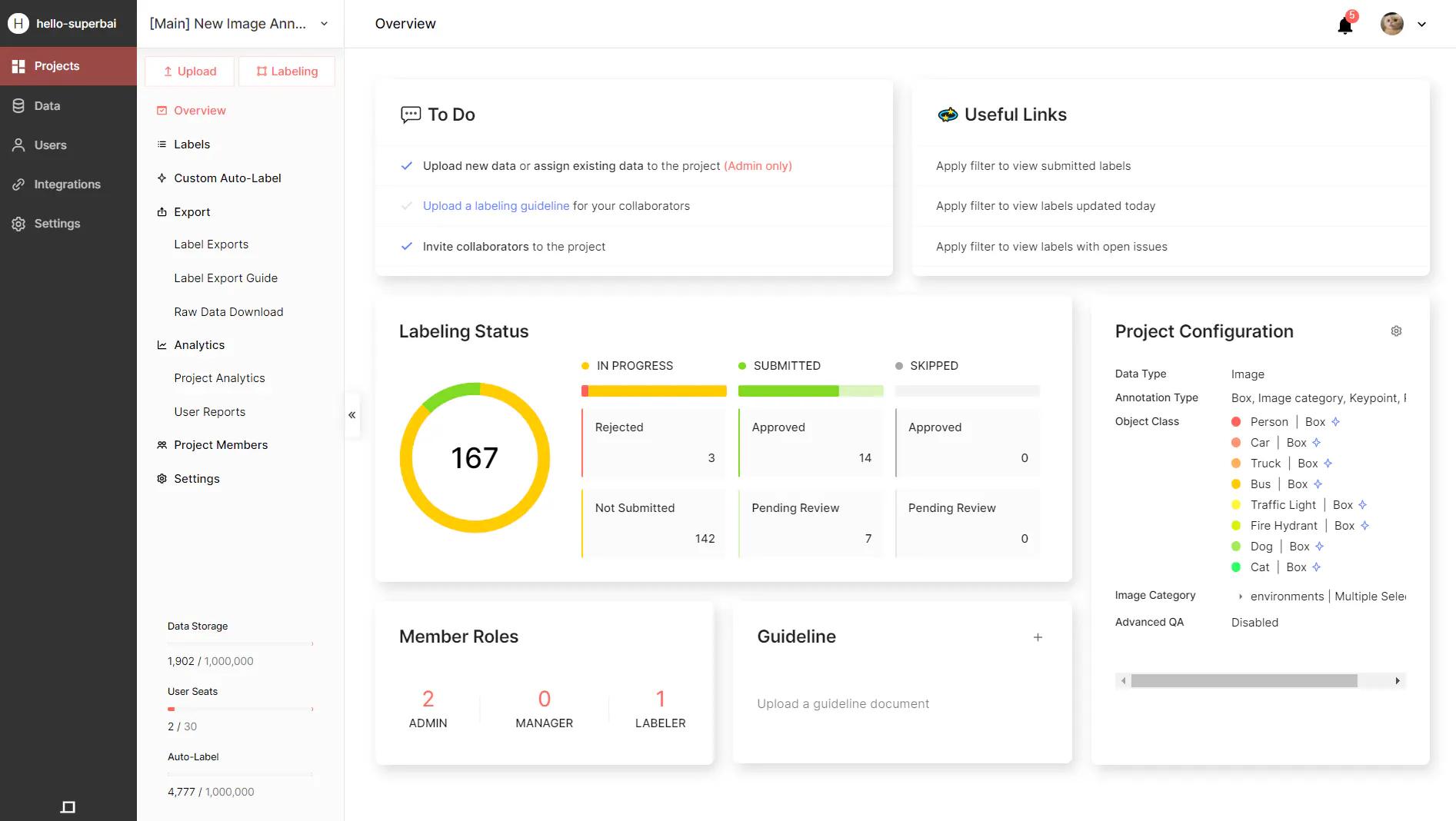

Manual QA improves visibility into overall QA efforts by adding review actions to the Project Overview tab. Its Review Chart groups labels into the following buckets: Rejected (labels rejected and not yet resubmitted), Pending Review (labels rejected and then resubmitted by the labeler), Approved (labels that have been approved), and Not Reviewed (labels submitted but not yet reviewed).
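The four buckets can be derived from two pieces of state per label: its current status and its most recent review action. The Python sketch below shows one plausible mapping and a tally over a list of labels. The function names and string values are assumptions for illustration, and counting skipped labels alongside submitted ones is inferred from the approval process described earlier rather than confirmed.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

# Statuses a reviewer can act on (assumption: skipped labels are reviewable too).
REVIEWABLE = {"Submitted", "Skipped"}


def review_bucket(status: str, review: Optional[str]) -> Optional[str]:
    """Map a label's (status, last review action) pair to a Review Chart bucket."""
    if review == "Approved":
        return "Approved"
    if review == "Rejected":
        # Still with the labeler -> Rejected; handed back in -> Pending Review.
        return "Pending Review" if status in REVIEWABLE else "Rejected"
    if status in REVIEWABLE:
        return "Not Reviewed"
    return None  # never-reviewed, in-progress labels are not charted


def review_chart(labels: Iterable[Tuple[str, Optional[str]]]) -> Counter:
    """Count how many labels fall into each bucket."""
    chart: Counter = Counter()
    for status, review in labels:
        bucket = review_bucket(status, review)
        if bucket is not None:
            chart[bucket] += 1
    return chart


labels = [
    ("Submitted", None),
    ("Skipped", None),
    ("In Progress", "Rejected"),
    ("Submitted", "Rejected"),
    ("Submitted", "Approved"),
    ("In Progress", None),
]
print(review_chart(labels))
```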

An Ideal Workflow with Manual Review

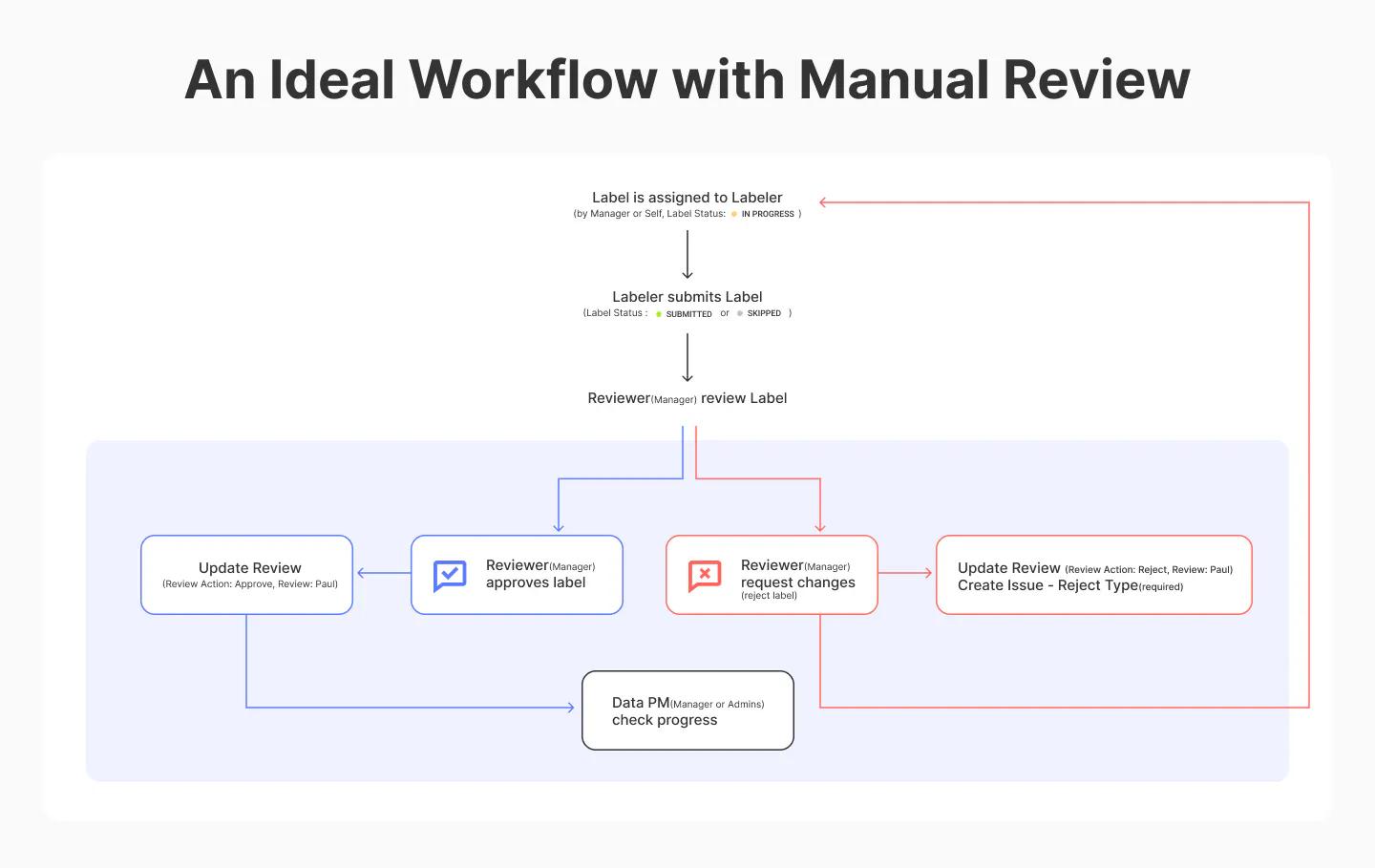

This Manual QA release fits seamlessly into the existing workflow of Suite's three user roles: Labeler, Reviewer, and Data Project Manager (PM). These roles map onto the platform's Admin/Owner/Manager and Labeler permissions:

1. A label is initially assigned to a Labeler (either by the Reviewer or by the Labeler themselves). The label status is In Progress.

2. The Labeler either submits or skips the label (skipping applies when the data point is hard to label or the image is corrupt). The label status is either Submitted or Skipped.

3. The Reviewer reviews the label. This is where Manual QA comes in. Using the functionalities of Manual QA mentioned in the previous section, the Reviewer can either approve or reject that label.

4. If the label is approved, the Reviewer updates the Review Action to Approve. Then, the Data PM can jump in to check project progress.

5. If the label is rejected, the Reviewer updates the Review Action to Reject, then creates an issue thread and asks the Labeler to submit a new label.

This workflow provides a straightforward, well-defined process for cross-checking the accuracy of your labeled data, which improves labeled data consistency over the long run. It also strengthens project and time management by surfacing which work needs to be reviewed or reworked at the labeler, reviewer, and data project manager levels.

New and more intuitive platform interface

Over the last couple of years, we have spent a lot of time and resources building and shipping technology components that will soon fit together into something even greater. When that happens, delivering the full value and functionality of our platform will require an interface that is elegantly simple yet purposeful. That is why we decided to completely overhaul the legacy interface. With a much more accessible mission control panel that prioritizes efficiency, our new interface gives you one-click access to key workflow steps, supports more intuitive decision-making, and provides the efficiency teams need to kick off projects quickly and deliver datasets without the hassle of learning complex, unfamiliar systems.

Conclusion

Our customers have been looking for more ways to streamline and scale their data management workflows, so we went back to the drawing board to find ways to improve our Suite product. This release is just the start. We are confident that the new Manual QA will help you label more data faster and scale up the construction of high-quality training datasets for your ML endeavors!


About Superb AI

Superb AI is an enterprise-level training data platform that is reinventing the way ML teams manage and deliver training data within organizations. Launched in 2018, the Superb AI Suite provides a unique blend of automation, collaboration, and plug-and-play modularity, helping teams drastically reduce the time it takes to prepare high-quality training datasets. If you want to experience the transformation, sign up for free today.