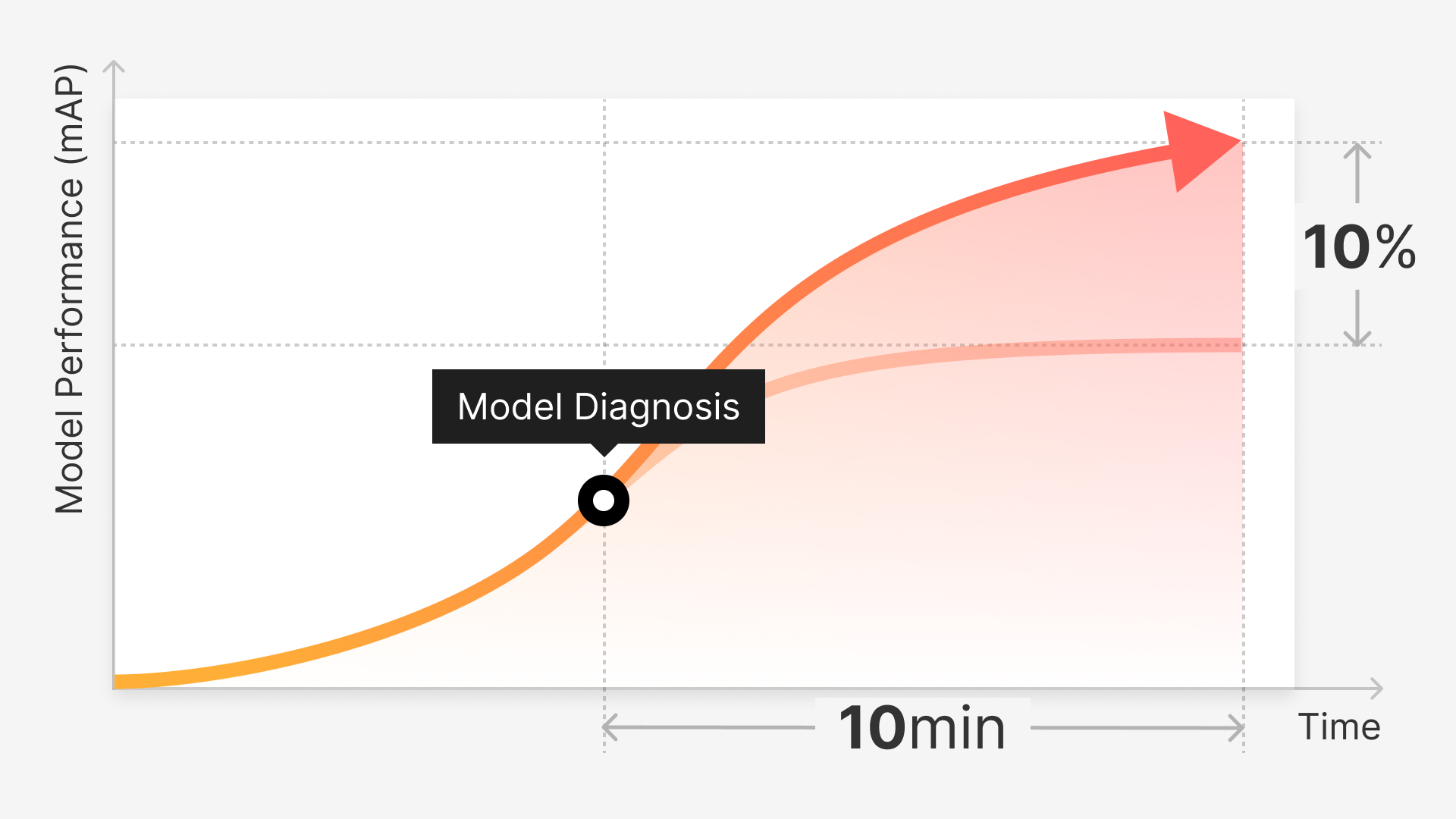

As computer vision models grow more complex, it becomes crucial to assess their performance and identify their limitations and inherent biases. Model diagnostics play a vital role in understanding the intricacies of these models and ensuring their reliability, efficiency, and interpretability. By identifying areas of improvement, developers can refine their models and achieve better performance.

The most recent advancements in computer vision technology have facilitated its widespread adoption across various sectors, transforming the way we interpret and examine visual information. With the growing reliance on these models in critical domains, it’s of utmost importance to guarantee their robustness, accuracy, and unbiased nature.

Advanced model diagnostics serve as a cornerstone in tackling these concerns, equipping developers with the essential tools and knowledge to refine their models, fine-tune hyperparameters, and ensure that AI systems produce dependable and equitable results.

Embracing advanced diagnostic approaches enables us to narrow the gap between the existing capabilities of computer vision technology and its maximum potential, ultimately fostering AI systems that exhibit greater trustworthiness, transparency, and efficacy in solving real-world problems.

1. Common Challenges in Computer Vision Diagnostics

Below we’ll be delving into the common challenges encountered in computer vision diagnostics, which significantly impact the performance and reliability of AI models.

As we explore issues such as overfitting, class imbalance, object occlusion, and background clutter and noise, we aim to develop a deeper understanding of these challenges and work towards creating more accurate and robust computer vision models.

1.1. Overfitting

Overfitting occurs when a model learns the training data too well, capturing noise and spurious patterns that do not generalize to unseen data. The result is high accuracy on the training set but poor performance on new data and a lack of robustness.

1.2. Class Imbalance

Class imbalance is a common problem in computer vision, where certain classes are underrepresented in the training data. This can lead to biased predictions and poor performance for minority classes.
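One common way to counter class imbalance is to weight each class inversely to its frequency when computing the loss. The snippet below is a minimal sketch of that idea; the function name `inverse_frequency_weights` and the toy labels are illustrative assumptions, not part of any particular library.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one weight per class, proportional to 1 / class frequency.

    Weights are scaled so that a perfectly balanced dataset would give
    every class a weight of 1.0.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Toy dataset (assumed for illustration): 90 cat images, 10 dog images.
labels = ["cat"] * 90 + ["dog"] * 10
weights = inverse_frequency_weights(labels)
```

Here the minority class ("dog") receives a larger weight than the majority class, so its errors count for more during training.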

1.3. Object Occlusion and Variations

Objects in real-world images can often be partially occluded or present in various poses, sizes, and lighting conditions. These variations can pose challenges for computer vision models, leading to misclassification or false detections.

1.4. Background Clutter and Noise

Background clutter and noise in images can confuse computer vision models and affect their performance. Identifying and addressing these issues is crucial to ensure accurate predictions.

2. Advanced Model Diagnostic Techniques

In this section, we’ll discuss advanced model diagnostic techniques in three groups: feature visualization, error analysis, and regularization. Feature visualization methods like activation maps, Grad-CAM, and t-SNE offer valuable insights into the features learned by models and their decision-making processes. By utilizing these techniques, researchers can boost the interpretability of deep learning models, pinpoint potential biases, and fine-tune their performance.

2.1. Feature Visualization

Activation Maps: Activation maps visualize the output of specific layers in a neural network, highlighting the features that the model has learned to recognize. These maps can help identify which parts of the input image contribute most to the model's prediction.

Grad-CAM: Gradient-weighted Class Activation Mapping (Grad-CAM) is a technique that produces a heatmap highlighting the regions in the input image most relevant to the model's prediction. This method offers insights into the model's decision-making process and helps identify potential biases.
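The core Grad-CAM computation can be sketched in a few lines. The example below assumes the feature maps of one convolutional layer and the gradients of the target class score with respect to those maps have already been extracted from a framework; the function name `grad_cam` and the tiny 2x2 inputs are hypothetical. In practice the resulting heatmap is also upsampled to the input image size, which is omitted here.

```python
def grad_cam(activations, gradients):
    """Combine feature maps and gradients into a Grad-CAM heatmap.

    activations, gradients: lists of K feature maps, each an H x W nested
    list of floats, taken from one convolutional layer for the target class.
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = gradient averaged over all spatial positions.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    heatmap = [[0.0] * width for _ in range(height)]
    for w, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += w * fmap[i][j]
    # ReLU keeps only the regions that push the class score up.
    return [[max(0.0, v) for v in row] for row in heatmap]


# Two toy channels: the first supports the class, the second opposes it.
activations = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
gradients = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
cam = grad_cam(activations, gradients)
```

Only the top-left cell stays highlighted, because the second channel has a negative weight and its contribution is removed by the ReLU.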

t-SNE: t-Distributed Stochastic Neighbor Embedding (t-SNE) is a dimensionality reduction technique that can be used to visualize high-dimensional feature spaces in two or three dimensions. By analyzing these visualizations, researchers can gain insights into the learned features and their separability.

Layer-wise Relevance Propagation (LRP): LRP is a method that decomposes the model's output into contributions from each input feature, allowing researchers to understand which features are most relevant for a specific prediction. This technique enhances the interpretability of deep learning models and helps identify potential biases.
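To make the decomposition concrete, here is a minimal sketch of one widely used LRP variant, the epsilon rule, applied to a single linear neuron without a bias term. A full LRP implementation repeats a step like this layer by layer from the output back to the input; the function name and the toy values are assumptions for illustration.

```python
def lrp_epsilon_linear(inputs, weights, relevance_out, eps=1e-6):
    """Redistribute the relevance of one linear neuron onto its inputs.

    Each input receives a share proportional to its contribution x_i * w_i
    to the pre-activation z. eps stabilizes the division when z is near 0.
    """
    contributions = [x * w for x, w in zip(inputs, weights)]
    z = sum(contributions)
    stabilizer = eps if z >= 0 else -eps
    return [c / (z + stabilizer) * relevance_out for c in contributions]


inputs = [1.0, 2.0, 0.0]
weights = [0.5, 0.25, 3.0]
# Start from the neuron's own output as the relevance to explain.
output = sum(x * w for x, w in zip(inputs, weights))
relevances = lrp_epsilon_linear(inputs, weights, relevance_out=output)
```

The relevances sum (up to the small stabilizer) to the output being explained, and an input of zero receives no relevance even though its weight is large.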

2.2. Error Analysis

Confusion Matrices: Confusion matrices are useful tools for evaluating the performance of a computer vision model by presenting the number of correct and incorrect predictions for each class in a tabular format. Analyzing confusion matrices can help identify which classes the model is struggling with and guide developers in refining their models to address these issues.
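As a small illustration, the sketch below builds a confusion matrix from lists of true and predicted labels and derives per-class recall from it. The class names and predictions are made up for the example.

```python
def confusion_matrix(y_true, y_pred, classes):
    """Rows are true classes, columns are predicted classes."""
    index = {cls: i for i, cls in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, pred in zip(y_true, y_pred):
        matrix[index[truth]][index[pred]] += 1
    return matrix


classes = ["cat", "dog", "bird"]
y_true = ["cat", "cat", "dog", "dog", "bird", "bird"]
y_pred = ["cat", "dog", "dog", "dog", "cat", "bird"]

cm = confusion_matrix(y_true, y_pred, classes)
# Per-class recall: correct predictions divided by the row total.
recall = {cls: cm[i][i] / sum(cm[i]) for i, cls in enumerate(classes)}
```

Reading along a row shows where a class's examples end up. In this toy case, half of the cats are mistaken for dogs and half of the birds for cats, which points to the class pairs worth inspecting first.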

Precision-Recall and ROC Curves: Precision-Recall and Receiver Operating Characteristic (ROC) curves are popular methods for assessing the performance of binary classifiers across decision thresholds. An ROC curve plots the true positive rate (sensitivity, also called recall) against the false positive rate (1 - specificity), while a Precision-Recall curve plots precision against recall. By analyzing these curves, developers can choose a decision threshold that gives the best balance between these metrics for their application.
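Each point on these curves comes from evaluating the classifier at one score threshold. The sketch below computes precision, recall, and false positive rate at a given threshold; sweeping the threshold traces out both curves. The labels, scores, and function name are illustrative assumptions.

```python
def rates_at_threshold(y_true, scores, threshold):
    """Compute precision, recall (TPR), and FPR for one decision threshold."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive and label == 0:
            fp += 1
        elif label == 1:
            fn += 1
        else:
            tn += 1
    # Convention assumed here: precision is 1.0 when nothing is predicted positive.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return {"precision": precision, "recall": recall, "fpr": fpr}


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]

strict = rates_at_threshold(y_true, scores, threshold=0.5)
lenient = rates_at_threshold(y_true, scores, threshold=0.2)
```

Lowering the threshold from 0.5 to 0.2 raises recall but also raises the false positive rate and lowers precision, which is the trade-off the curves make visible.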

Bounding Box Regression Diagnostics: In object detection tasks, bounding box regression diagnostics can help identify issues with the localization of objects within an image. By comparing the predicted bounding boxes to ground truth, researchers can assess the model's ability to accurately identify and localize objects, and take corrective actions if necessary.
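A standard measure for comparing a predicted box with its ground truth is Intersection over Union (IoU). The sketch below assumes boxes are given as `(x1, y1, x2, y2)` corner coordinates; that format and the function name are assumptions, since the article does not specify a convention.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = (0, 0, 2, 2)
prediction = (1, 1, 3, 3)
overlap = iou(ground_truth, prediction)
```

Plotting the distribution of IoU values per class, or against object size, is one way to see whether localization errors are concentrated in a particular subset of the data.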

2.3. Regularization Techniques

Dropout: Dropout is a popular regularization technique used in deep learning models to prevent overfitting. It involves randomly dropping out neurons during training, forcing the model to learn more robust features. By incorporating dropout, developers can improve the generalization capabilities of their computer vision models.
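The mechanism can be sketched without a deep learning framework. The example below shows "inverted" dropout, the variant most frameworks use, in which surviving activations are scaled up during training so that nothing needs to change at inference time. The function signature is an assumption for illustration.

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each activation with probability p (0 <= p < 1).

    Surviving activations are divided by (1 - p) so the expected value of
    each unit is unchanged. At inference time the input passes through as-is.
    """
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the example is repeatable
dropped = dropout([1.0] * 1000, p=0.5, rng=rng)
unchanged = dropout([1.0, 2.0], p=0.5, training=False)
```

With `p=0.5`, roughly half of the units are zeroed and the rest are doubled, so the average activation stays close to its original value.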

Batch Normalization: Batch normalization normalizes the inputs to each layer of a neural network using the mean and variance of the current mini-batch, helping to stabilize the learning process and reduce the training time. It can also act as a form of regularization, potentially improving the model's performance on unseen data.
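For a single feature, the training-time computation looks roughly like the sketch below. The parameters `gamma` and `beta` stand in for the learned scale and shift; the running statistics that frameworks keep for inference are left out to keep the example short.

```python
def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift.

    Uses the batch mean and (biased) batch variance, as done at training time.
    """
    mean = sum(batch) / len(batch)
    variance = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / (variance + eps) ** 0.5 + beta for x in batch]


normalized = batch_norm([1.0, 2.0, 3.0, 4.0])
```

After normalization the values have approximately zero mean and unit variance, regardless of the scale of the original activations.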

Weight Decay: Weight decay is another regularization technique that adds a penalty on the magnitude of the weights (typically the squared L2 norm) to the loss function, discouraging large weights and encouraging simpler models. By applying weight decay, developers can mitigate the risk of overfitting and improve the model's generalization performance.
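With plain stochastic gradient descent, an L2 penalty of `(weight_decay / 2) * sum(w ** 2)` adds `weight_decay * w` to each gradient. The sketch below shows one such update step; the function name and hyperparameter values are illustrative assumptions.

```python
def sgd_step_with_weight_decay(weights, grads, lr, weight_decay):
    """One SGD update where the L2 penalty contributes weight_decay * w."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


weights = [1.0, -2.0]
# With zero data gradients, the penalty alone pulls every weight toward 0.
updated = sgd_step_with_weight_decay(weights, grads=[0.0, 0.0], lr=0.1, weight_decay=0.5)
```

Each weight shrinks by a fixed fraction per step (here 5%), so weights that the data gradient does not actively support decay toward zero.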

Early Stopping: Early stopping is a regularization technique that involves stopping the training process when the model's performance on a validation set starts to degrade, preventing the model from overfitting the training data. Early stopping allows developers to find the optimal point in the training process, ensuring the best possible performance on unseen data.
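A common way to implement this is with a "patience" counter: training stops once the validation loss has failed to improve for a set number of epochs, and the weights from the best epoch are restored. The sketch below applies that rule to a recorded list of validation losses; the function name, `patience`, and `min_delta` are assumed names for the illustration.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops at the first epoch that is `patience` epochs past the
    best one without an improvement larger than `min_delta`.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
```

In this toy run the loss bottoms out at epoch 2 and training stops at epoch 4, so the checkpoint from epoch 2 would be the one to keep.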

3. Automating Model Assessment

Automated curation plays a crucial role in model assessment, as it enables developers to create well-balanced and diverse datasets for training and validation. Superb Curate is an advanced automated curation tool whose features enable developers to extract the most valuable insights from large-scale datasets.

By incorporating queryable metadata, clustering techniques, and AI-based curation algorithms, developers can efficiently classify the features within a dataset and visualize the data for effective training and validation.

3.1. Image Curation and Clustering

Image curation leverages embedding technology to cluster images based on visual similarity. Users can toggle between tile and scatter views in the ‘Dataset page’ to visualize the clusters and adjust cluster sizes or apply filters. Queryable metadata can be used to further classify the features within a dataset, allowing for a more refined curation process.

The image curation feature ensures the dataset contains images with varied backgrounds, compositions, and angles, making it particularly useful when curating and labeling large raw datasets for the first time or when selecting data for initial Custom Auto-Label (CAL) training.

3.2. Object Curation and Queryable Metadata

Object curation balances class distribution by using annotation information and curating visually diverse data within each class. While similar to image curation, object curation takes advantage of annotation information attached to images during the curation process. Queryable metadata can also be employed in this stage to classify specific features, enhancing the curation output.

This approach is beneficial when curating meaningful labeled data for splitting into training, validation, and test sets or when image curation fails to provide the desired curated output. Users can upload their model inference results (annotations) or CAL annotations and use object detection for more sophisticated curation needs using the Superb Suite.

3.3. Auto-Curation: Sparseness, Label Noise, and Class Balance

Superb Curate's Auto-Curate feature uses an AI-based curation algorithm based on embeddings to select rare edge cases and ensure the dataset is representative. It considers four curation criteria: sparseness, label noise, class balance, and feature balance. Auto-Curate selects data points that are located sparsely in the embedding space or are rare in the dataset, reducing the risk of overfitting while improving model robustness and accuracy.
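The general idea of embedding-space sparseness can be illustrated with a simple nearest-neighbor score. To be clear, the snippet below is not Superb Curate's algorithm, which is not described here; it is a generic sketch that assumes each image already has an embedding vector and scores it by its mean distance to its `k` nearest neighbors. All names and values are hypothetical.

```python
import math


def sparseness_scores(embeddings, k=2):
    """Score each point by its mean distance to its k nearest neighbors.

    Higher scores mean the point sits in a sparser region of the
    embedding space, which makes it a candidate edge case.
    """
    scores = []
    for i, point in enumerate(embeddings):
        distances = sorted(
            math.dist(point, other)
            for j, other in enumerate(embeddings)
            if j != i
        )
        nearest = distances[:k]
        scores.append(sum(nearest) / len(nearest))
    return scores


# Three similar images and one outlier, as toy 2-D embeddings.
embeddings = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0)]
scores = sparseness_scores(embeddings, k=2)
rarest_index = scores.index(max(scores))
```

The outlier at `(5.0, 5.0)` gets the highest score, so a curation step that prioritizes sparse points would select it first.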

With Auto-Curate, users can curate datasets of unlabeled images with an even distribution and minimal redundancy. They can also curate only images that are rare or have a high likelihood of being edge cases or only images that are representative of the dataset and occur frequently. Auto-Curate helps to reduce the manual work of curation and ensures that machine learning teams can build more effective models with accurate and well-curated datasets.

By incorporating queryable metadata, clustering techniques, and AI-based curation algorithms into the model assessment process, Superb Curate empowers developers to streamline the creation and refinement of datasets, leading to more accurate and reliable computer vision models.

4. Applications of Advanced Model Diagnostic Techniques

Model diagnostic techniques not only aid in uncovering weaknesses and limitations of models, but also help identify and address biases, thereby ensuring more accurate and reliable predictions. Gaining insights into model behavior through advanced diagnostics contributes to better model designs and well-informed decisions when deploying AI systems in real-world applications.

4.1. Improving Model Performance

Advanced model diagnostic techniques can help developers identify weaknesses and limitations in their models, enabling them to make informed decisions on how to improve their performance. By addressing these issues, practitioners can ensure more accurate and reliable predictions in real-world applications.

4.2. Identifying and Addressing Biases

By uncovering biases and potential issues in computer vision models, advanced diagnostic techniques can help developers create fairer and less biased models. Addressing these biases is crucial to ensuring that AI systems are equitable and do not perpetuate existing inequalities.

4.3. Gaining Insights into Model Behavior

Advanced diagnostic techniques offer insights into the decision-making processes of computer vision models, helping developers and researchers better understand their behavior. This understanding can lead to better model designs and more informed decisions when deploying AI systems in real-world applications.

5. Future Directions in Advanced Model Diagnostics

As we venture into the future of advanced model diagnostics, several promising directions emerge, including the integration of unsupervised learning techniques, the development of robust model explanations, and harnessing the power of transfer learning.

These advancements will enable researchers to uncover hidden patterns, improve interpretability, and optimize pre-trained models for specific applications, ultimately resulting in more accurate and reliable computer vision models.

5.1. Incorporating Unsupervised Learning Techniques

Unsupervised learning techniques, such as clustering and dimensionality reduction, can be integrated into advanced model diagnostics to provide more robust and informative analyses. These methods can help uncover hidden patterns and structures in the data, enabling researchers to better understand the relationships between features and model performance.

5.2. Developing More Robust Model Explanations

As computer vision models continue to grow in complexity, there is a need for more robust and interpretable explanations of their decision-making processes. Future research in advanced model diagnostics should focus on developing techniques that provide clear, reliable, and easily understandable explanations for the predictions made by these models.

5.3. Harnessing the Power of Transfer Learning

Transfer learning, which involves leveraging pre-trained models for new tasks, is a powerful technique in computer vision. Advanced model diagnostics can be applied to fine-tune these pre-trained models for specific applications, helping to ensure that they perform optimally and maintain their generalization capabilities.

The Importance of Advanced Model Diagnostics

The growing complexity of computer vision models and the increasing demand for high-quality performance across various applications necessitate the use of advanced model diagnostics. These advanced diagnostics enable developers to dive deeper into the intricacies of their models, uncovering hidden patterns and identifying areas for improvement.

By analyzing the inner workings of models, developers can address issues such as overfitting, underfitting, and class imbalance while also fine-tuning hyperparameters to achieve optimal performance. Furthermore, advanced model diagnostics play a crucial role in the interpretability and explainability of AI systems.

As computer vision models become more integrated into real-world applications, such as medical diagnostics, autonomous vehicles, and facial recognition, it is essential to understand how these models arrive at their decisions.

Advanced diagnostics provide insights into the decision-making process, enabling developers to ensure that their models are making accurate and unbiased predictions. This increased transparency is not only vital for gaining the trust of end-users but also for meeting regulatory requirements and ensuring ethical AI practices.