Developing efficient and effective machine learning models poses a plethora of challenges. Of utmost importance to surmounting these hurdles is the early detection and correction of data-related issues. To this end, model diagnosis, a tool to pinpoint and rectify data problems before they impede model performance, is invaluable.

In this discussion, we shed light on how Superb AI's Curate tool is transforming model diagnosis and early data issue detection, thereby contributing significantly to the creation of balanced, high-quality datasets essential for accurate and unbiased computer vision models.

For a comprehensive understanding of challenges associated with data balance and accuracy, and how Superb Curate is addressing them, we encourage you to read our related article, "Curating for Accuracy: Building Balanced Computer Vision Datasets."

We Will Cover

Early detection of model data issues

Evaluation and training set validity

Techniques for early detection of data issues

Introduction to Superb AI’s model diagnosis tools

Automating model training and optimization

Deciphering Model Diagnostics

Model diagnostics, also known as model evaluations, are a sequence of checks and balances developed to detect and troubleshoot potential data issues, as well as suggest enhancements during various stages of machine learning model training and development.

These diagnostic tests offer contextual insights into the effectiveness of the learning algorithm and the performance of the machine learning model, clarifying what is working, what isn't, and how to bolster model performance. Diagnostic checks come in different forms, including dataset sanity checks, model checks, and leakage detection, each serving a unique purpose in the diagnostic process.

Dataset Sanity Checks: These checks ensure the integrity of the used data. They can help spot potential issues like missing values, outliers, or inconsistencies that could yield unreliable results.

Model Checks: These checks focus on the model itself. They include tests for overfitting, underfitting, and verifying the model's assumptions align with the data.

Leakage Detection: Data leakage can lead to overly optimistic performance estimates. Leakage detection checks assist in identifying any leakage, ensuring accurate assessment of the model's performance.

Performing ML Diagnostics

Contemporary data science tools enable various diagnostic tests on training and deployed models. These tests offer valuable insights into model failures and propose suitable corrective solutions to circumvent issues. For instance, data balancing ensures a balanced dataset when training neural networks, reducing learning bias. This is particularly vital in data classification tasks, where flawed data is required.

Validating Evaluation and Training Sets

For reliable machine learning model evaluation, the dataset used should accurately represent both the training and future scoring data. If the test set is too small, the model's performance estimation may be unreliable.

It's also necessary that the distribution of the target variable in test data aligns with the training data distribution. If this isn't the case, the metrics can be misleading. In such scenarios, larger test sets, a higher percentage for testing, or the use of cross-validation is advised.

Common Data Issues to Diagnose

When building computer vision models, we frequently encounter common data challenges such as class imbalance, scenario imbalance, data variability, and noise. These issues can considerably hinder model performance, accuracy, and overall efficiency.

Class imbalance is when certain classes of data are over or underrepresented, leading to skewed predictions.

Scenario imbalance occurs when some real-world scenarios are inadequately represented in the data, causing the model to underperform in those scenarios.

Data variability refers to the heterogeneity in the dataset, which can make it challenging for the model to generalize across diverse scenarios.

Noise includes both mislabeled data and outliers that can distort model learning and predictions.

The Importance of Early Detection

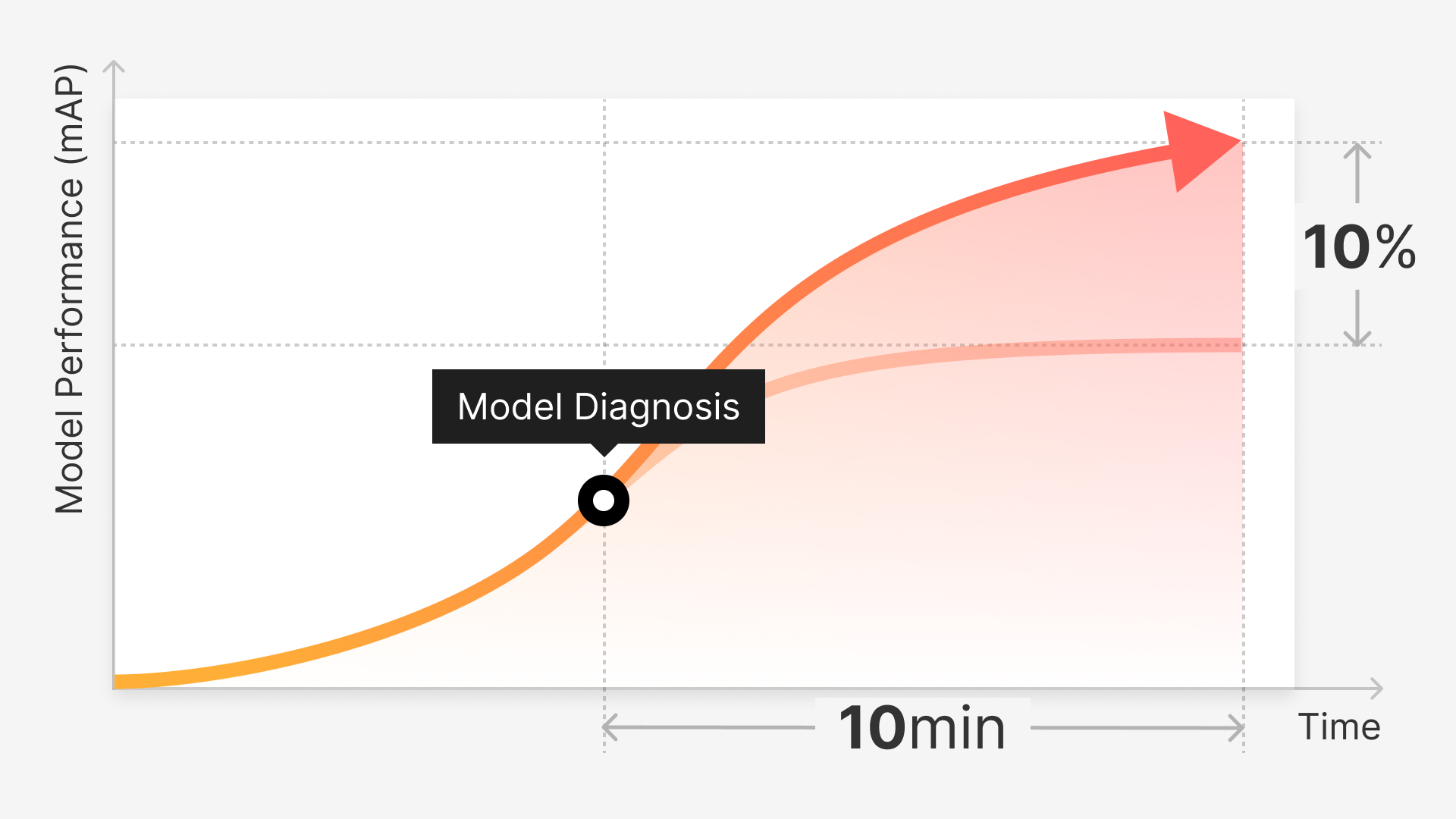

Model diagnosis is a process that seeks to identify and understand the weaknesses in a model, enabling us to correct these issues before model deployment. It plays a key role in detecting and resolving data challenges, thereby enhancing model performance and accuracy.

Early detection and resolution of data issues are, therefore, vital to creating reliable machine learning models. By validating models post-training, model diagnosis can pinpoint specific data slices where the model performs poorly, enabling us to make necessary improvements in advance.

Techniques for Early Detection of Data Issues

Model diagnosis applies specific techniques for each data challenge:

To address class imbalance, we may employ techniques like oversampling minority classes or undersampling majority classes.

Scenario imbalance can be rectified by collecting more representative data for underrepresented scenarios.

Data variability can be managed by incorporating diverse data during training to improve model generalization.

Techniques like anomaly detection can be used to identify and manage noise (outliers and mislabeled data).

Handling Class Imbalance

Class imbalance is a prevalent issue where some classes have significantly more samples than others. To tackle this, techniques such as oversampling minority classes or undersampling majority classes are employed.

Oversampling Minority Classes: This method involves adding more instances of the minority class in the dataset. The goal is to balance the number of instances between the classes and provide the model with more data to learn from the underrepresented classes.

Undersampling Majority Classes: This strategy reduces the instances of the majority class in the dataset. The goal is to prevent the model from becoming biased towards the majority class due to its higher prevalence.

1. Scenario Imbalance

Scenario imbalance occurs when certain scenarios are underrepresented in the dataset. This can lead to a model that performs poorly when faced with these underrepresented scenarios. To address this, we can collect more representative data for underrepresented scenarios. This will ensure the model is exposed to a wider variety of situations and thus improve its ability to handle them.

2. Data Variability

High data variability can impact the model's ability to generalize effectively. To handle this, diverse data should be incorporated during training. This exposes the model to a broad range of data patterns and scenarios, ultimately enhancing its ability to generalize and make accurate predictions when faced with new or unseen data.

Identifying and Handling Noise

Noise in data can come in various forms, such as outliers and mislabeled data, and can significantly impact the performance of the model.

Anomaly Detection: This technique is used to identify outliers in the dataset. These are data points that significantly deviate from the rest of the data. Once identified, these outliers can be investigated and handled appropriately.

Handling Mislabeled Data: Mislabeled data can lead to a model learning incorrect patterns. Techniques like data cleansing and manual review can be used to identify and correct these mislabeled instances.

1. Detecting Anomalies in Datasets

Superb Curate's Auto-Curate tool can be leveraged for anomaly detection. When we configure our curation algorithm to emphasize "sparseness" criteria, the tool is instructed to select cases that are further away from other data points in the embedding space. Essentially, this means that the algorithm will focus on selecting data points that are in rare positions or have a high likelihood of being edge cases.

2. Correcting Mislabels

Mislabeled data poses a serious problem in the learning process of a machine learning model. If the model is trained on incorrectly labeled data, it might learn incorrect patterns, which can significantly affect the model's performance. Correctly identifying and handling these mislabeled instances is a crucial step in ensuring the balanced performance of any model.

Auto-Curate can also play a significant role in addressing this issue. We can utilize the label noise criterion within Auto-Curate to identify potential mislabeled data. The principle is that if a data point is situated near other data points with differing labels, it's likely that the data point is mislabeled. By identifying these instances, users can correct the mislabeling errors and use the corrected data to further train the model.

Superb AI’s Solutions to Model Diagnosis

Superb AI's Curate and Model tools collectively offer an integrated solution that tackles various challenges in AI adoption, delivering a one-two punch in AI project implementation. Together, they serve as a robust tool for model diagnosis, training, and deployment, seamlessly undergoing the MLOps cycle within a single platform.

1. A Layered View of Data Faults

Visualizing image data through embeddings provides a significant advantage in the model diagnosis process. Superb AI’s Curate uses a unique approach to represent image data, employing high-dimensional embedding algorithms. These algorithms generate dimensional vectors that offer a comprehensive, easily visualizable representation of the image data.

When these vectors are collapsed, users can begin to observe data trends and patterns. This ability is crucial in diagnosing early data issues, as it facilitates efficient and effective inspection and understanding of the data, particularly in cases of high data variability.

2. Following an Active Learning Workflow

Superb AI's Curate utilizes an active learning workflow for the most efficient data labeling. This iterative process involves labeling a small subset of data, training a labeling model, using the model to generate initial labels for the next subset, correcting the automated labels, training a new model, and repeating the process.

This workflow ensures continual learning and adaptation of the model to the data, promoting accuracy in labeling. By engaging in active learning, Curate enhances the detection of data issues at the early stages of model training.

3. Early Detection With Embeddings

Embeddings are crucial in the early detection of data issues. They are particularly beneficial when dealing with large amounts of raw or unlabeled data. Superb AI’s Curate generates embeddings for this data, which users can then visualize, examine and interpret for potential issues.

The tool goes a step further to apply this concept to labeled data as well. By generating embeddings for labeled data at an object level and then clustering these objects, Curate unveils valuable insights into potential mislabeled data, thus aiding in data quality assurance.

4. Detecting Mislabels Through Clusterization

Clusterization is another valuable technique in Superb AI's toolbox. By examining instances where individual objects share the same cluster as different object classes, Curate identifies labels likely to be incorrectly annotated.

5. Data Slicing for Effective Data Management

Data slicing is a powerful feature provided by Superb AI's Auto-Curate process. This process generates slices of data for user review, which can then be further sliced or sent back to the Label segment of the Superb Suite for labeling.

This not only enhances data interpretability but also enables efficient correction for data quality. The Auto-Curate process also generates a report to accompany each run, providing transparency into the inner workings of the embedding-based data curation process.

Superb Curate's ‘Query’ feature serves as a powerful assistant in the process of slicing and reviewing data. This tool, leveraging the metadata and annotation information tied to each dataset, enables users to craft targeted conditional statements or queries. This facilitates the extraction of precise data samples that satisfy the set conditions.

6. Active Learning in Superb AI's Curate

Curate uses an active learning workflow where it continually iterates over tasks: curating the data, identifying problematic areas, suggesting potential corrections, and refining the dataset based on user input.

Model Evaluation and Diagnosis

Model diagnosis, akin to a health check-up for your models, identifies potential problems at an early stage before they snowball into significant obstacles impacting performance. Superb AI’s Curate, designed to assist with these diagnostic tasks, swiftly identifies and rectifies data issues, supporting the creation of precise, efficient, and robust models. It can detect problems ranging from class imbalances to scenario imbalances and more, allowing developers to proactively address these issues.